Inside Indiana University's $40 million Data Center

If Bloomington gets hit by a nuclear strike, Oncourse will still be running after the fallout clears. At least, that was the impression I got when I toured the IU Data Center earlier this week. Located on the UITS campus at 10th St and the 45/46 bypass, IU's IT infrastructure hub sits in a beige, single-story building with just one set of windows. The subdued exterior hides the fact that the building has 18-inch-thick reinforced concrete walls and can withstand a hit from an F5 tornado. And once you get past the card-access front door, there's some very impressive IT and network engineering to be seen inside.

I was honestly really excited for my tour when I received an invite about a week ago. I'm going out on a limb here, but I'm guessing that being excited about a brand new data center is a phenomenon strictly limited to IT professionals. Seeing endless rows of server racks, perfectly routed bundles of fiber optic cables, and all the high performance electrical and plumbing work that goes into keeping the cogs of the Information Age spinning is just damn impressive, especially when you understand the work that goes into keeping it all online.

So that's why I took a few pictures, asked a few questions, and put together an article highlighting this 90,000 sq ft facility that handles IU's Netflix traffic, serves up their free software downloads, and does a few other things too.


This is the inconspicuous main entrance to the Data Center. It's the only area with windows on the whole building.

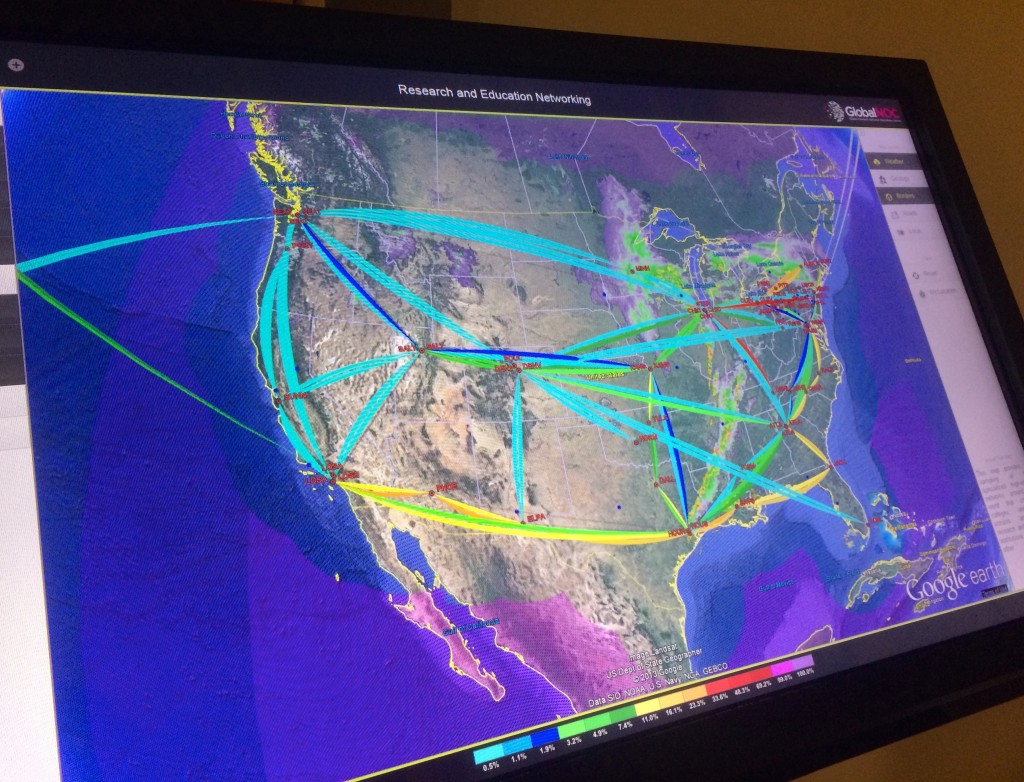

Here's one of the neat data visualization tools they had in the main lobby, showing the Internet2 academic fiber backbone. The colors represent percentages of each "pipe" that is being used. There's also a weather overlay displayed.

Here are two long rows of server racks in one of the main server pods. Each rack holds multiple computers, and a rack can sometimes be running thousands of virtualized server instances.

Here is a row of client-provided servers that are colocated at the Data Center. These servers contain sensitive data, so they can only be accessed by the client using the RFID locks on each rack (the red lights).

In the back of the Data Center are two 16-cylinder, 2,200 HP Cummins diesel generators. The Data Center keeps about 10,000 gallons of diesel fuel on hand at all times, meaning that they can run critical systems off a generator for 7 days without refueling.
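For a rough sense of scale, here's a back-of-the-envelope sketch of the average fuel burn those two numbers imply. It only uses the 10,000 gallon and 7 day figures from the tour; the function name is mine, and the real consumption will depend on the load, which I don't know.

```python
# Figures quoted on the tour (approximate)
FUEL_GALLONS = 10_000
RUNTIME_DAYS = 7


def implied_burn_rate_gph(fuel_gallons: float, runtime_days: float) -> float:
    """Average gallons per hour implied by a fuel reserve and a runtime."""
    return fuel_gallons / (runtime_days * 24)


rate = implied_burn_rate_gph(FUEL_GALLONS, RUNTIME_DAYS)
print(f"Implied average burn: {rate:.1f} gallons per hour")
```

That works out to roughly 60 gallons per hour on average over the week.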

Here's a look into the flywheel room. The flywheels in these boxes spin 24x7 whenever the main power is on. If the power suddenly gets cut, they'll continue to spin a generator, providing power to the servers for about 20 seconds. This gives the generators time to start up, since they take about 9 seconds to get going.
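The timing here is the whole point of the flywheels, so here's a tiny sketch of the safety margin. The 20 second and 9 second figures are the approximate ones from the tour; the function name is just something I made up for illustration.

```python
# Approximate figures quoted on the tour
FLYWHEEL_RIDE_THROUGH_S = 20  # how long the flywheels can carry the servers
GENERATOR_START_S = 9         # how long the diesel generators take to get going


def ride_through_margin_s(flywheel_s: float, generator_start_s: float) -> float:
    """Seconds of spare flywheel power left once the generators are up."""
    return flywheel_s - generator_start_s


margin = ride_through_margin_s(FLYWHEEL_RIDE_THROUGH_S, GENERATOR_START_S)
print(f"Margin after generator start: {margin} seconds")
```

In other words, the flywheels cover the generator start-up about twice over, leaving around 11 seconds of slack.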

This room contains a lot of lead-acid backup batteries, as you can see. While the critical systems would switch to the generators in an emergency, non-critical servers would switch over to these batteries. That gives the IT staff time to save everything and shut things down properly.

This room of green, Super Mario-style pipes is the heart of the chilled water cooling system. The Data Center also has a massive underground water tank in case the city water line is ever cut.

All that heat has to go somewhere, so water gets pumped out to these huge cooling towers outside.

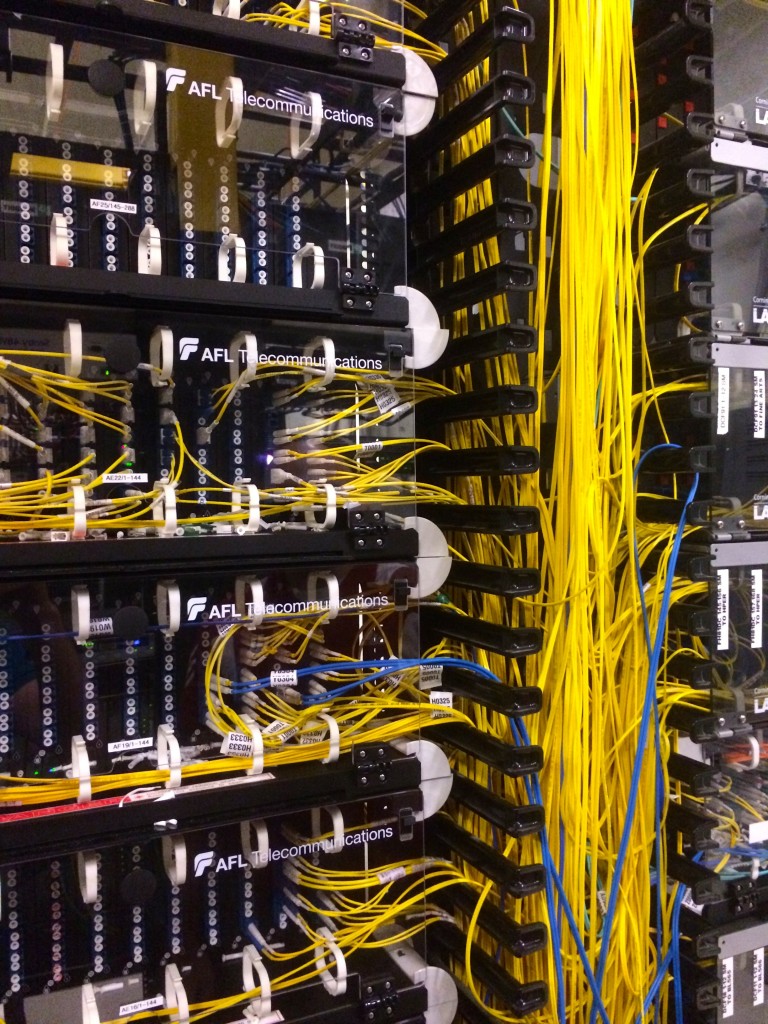

Here's where the magic behind that 100Mbps IU Internet happens. The yellow cables you see there are fiber optic cables. Each pair can transmit about 10Gbps of data, and it's all plugged into some very expensive network equipment. A few of these cables run all the way from Bloomington to Indianapolis, with lasers carrying data over distances of 50+ miles.

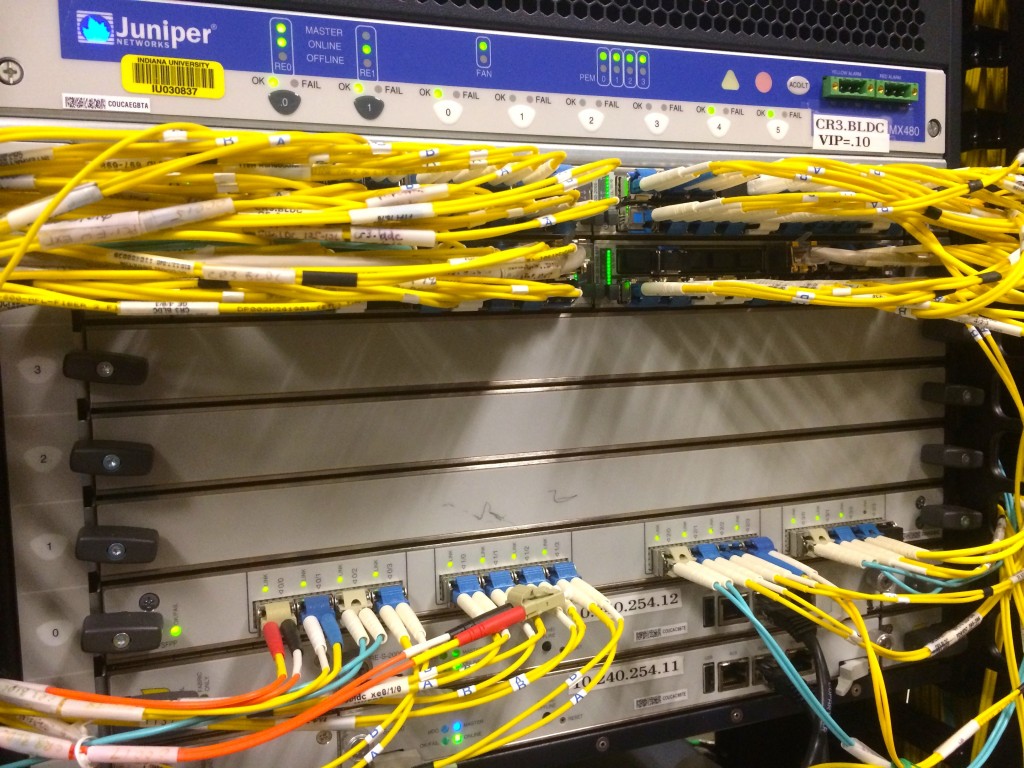

Here's a shot of some very expensive, ISP-class Juniper networking equipment. This is similar to what AT&T or Comcast might use to route traffic from several different neighborhood boxes.

Here's a placard for the crown jewel of the IU Data Center, Big Red II. This was the fastest university-owned supercomputer at the time of its assembly in 2013.

Here's a shot of the full row of Big Red II server racks, complete with a custom cooling system at the end.

It was a lot of fun seeing all this expensive hardware up close, and it makes you appreciate all the amazing, 21st-century engineering that goes into making everyday technology work. Hopefully you enjoyed this virtual tour as much as I enjoyed the real thing.
